📄 Paging - Complete Interview Guide

Master non-contiguous memory allocation with visual explanations

❌ The Problem: External Fragmentation

Memory Before Paging

Available Memory

1KB

Used

1KB

❌

Need to Load

2KB Process

External Fragmentation Problem:

• Total free memory = 2KB

• Process needs = 2KB

• But memory is scattered!

• Cannot allocate even though enough total space exists

Traditional Solution:

• Compaction (moving processes)

• But this is expensive and slow!

Paging Solution:

• Break process into smaller pieces

• Each piece fits in available holes

• Total free memory = 2KB

• Process needs = 2KB

• But memory is scattered!

• Cannot allocate even though enough total space exists

Traditional Solution:

• Compaction (moving processes)

• But this is expensive and slow!

Paging Solution:

• Break process into smaller pieces

• Each piece fits in available holes

✅ The Solution: Paging

After Paging

Break Process into Pages

Page 0

1KB

1KB

Page 1

1KB

1KB

✅

Fit in Available Frames

Page 0

Used

Page 1

Paging Key Concepts:

• Pages: Fixed-size blocks of logical memory

• Frames: Fixed-size blocks of physical memory

• Page Size = Frame Size (typically 4KB)

• Non-contiguous: Pages can be anywhere in memory

Benefits:

• No external fragmentation

• No compaction needed

• Better memory utilization

• Flexible allocation

• Pages: Fixed-size blocks of logical memory

• Frames: Fixed-size blocks of physical memory

• Page Size = Frame Size (typically 4KB)

• Non-contiguous: Pages can be anywhere in memory

Benefits:

• No external fragmentation

• No compaction needed

• Better memory utilization

• Flexible allocation

🎮 Interactive Paging Demo

Click buttons to see how paging works:

📄 Logical Memory (Pages)

Click "Load Process" to see pages

💾 Physical Memory (Frames)

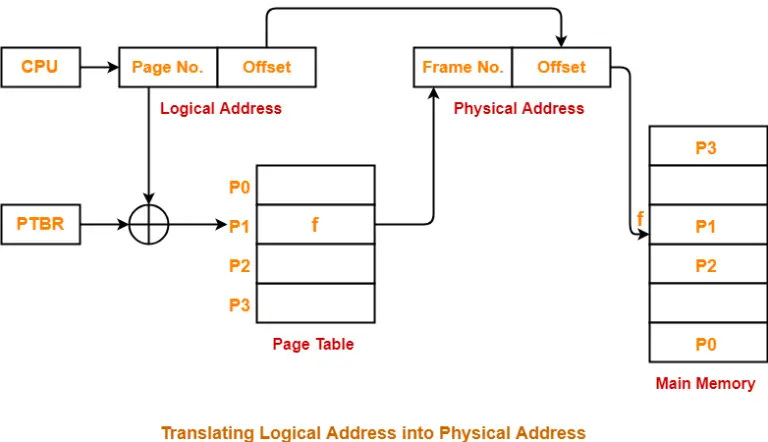

🔄 Address Translation (Interview Favorite!)

Logical Address Structure

Page Number (p)

Index into Page Table

Higher bits

Index into Page Table

Higher bits

+

Page Offset (d)

Offset within Page

Lower bits

Offset within Page

Lower bits

Formula:

Physical Address = Frame Number × Page Size + Offset

Example:

Logical Address = 1024 (Page 1, Offset 0)

If Page 1 → Frame 3, Page Size = 1024

Physical Address = 3 × 1024 + 0 = 3072

Physical Address = Frame Number × Page Size + Offset

Example:

Logical Address = 1024 (Page 1, Offset 0)

If Page 1 → Frame 3, Page Size = 1024

Physical Address = 3 × 1024 + 0 = 3072

🔧 Address Translation Steps

1

Extract Page Number

CPU generates logical address → Split into page number and offset

CPU generates logical address → Split into page number and offset

2

Check Page Table

Use page number as index to find corresponding frame number

Use page number as index to find corresponding frame number

3

Calculate Physical Address

Combine frame number with offset to get physical address

Combine frame number with offset to get physical address

4

Access Memory

Use physical address to access actual memory location

Use physical address to access actual memory location

⚡ TLB - Making Paging Fast

Problem: Too Many Memory Accesses

1

Access Page Table (Memory Access #1)

2

Access Actual Data (Memory Access #2)

Double the memory accesses = Half the speed!

TLB Solution:

• Translation Lookaside Buffer

• Hardware cache for page table entries

• Stores recent page → frame mappings

• Super fast lookup (parallel to page table)

TLB Hit: Found in TLB → 1 memory access

TLB Miss: Not in TLB → 2 memory accesses

Typical TLB Hit Rate: 90-99%

• Translation Lookaside Buffer

• Hardware cache for page table entries

• Stores recent page → frame mappings

• Super fast lookup (parallel to page table)

TLB Hit: Found in TLB → 1 memory access

TLB Miss: Not in TLB → 2 memory accesses

Typical TLB Hit Rate: 90-99%

🎯 TLB in Action

TLB Cache Contents

Page 0 → Frame 3

✅ Hit

Page 1 → Frame 7

✅ Hit

Page 2 → Frame 1

✅ Hit

Page 5 → Frame 9

✅ Hit

ASID (Address Space Identifier): Each TLB entry includes process ID to avoid conflicts between different processes

📊 Page Table Types (Advanced Interview Topic)

| Type | Description | Pros | Cons | Use Case |

|---|---|---|---|---|

| Single-Level | Simple array mapping | Fast, simple | Large memory overhead | Small address spaces |

| Multi-Level | Hierarchical structure | Space efficient | Multiple memory accesses | Large address spaces |

| Inverted | One entry per frame | Fixed size | Search overhead | Memory-constrained systems |

| Hashed | Hash table based | Good for sparse spaces | Hash collisions | Sparse address spaces |

⚖️ Paging vs Segmentation (Interview Question)

📄 Paging

- Fixed Size: All pages same size

- No External Fragmentation: Eliminates this problem

- Simple: Easy to implement

- Protection: Page-level protection

- Sharing: Pages can be shared

📑 Segmentation

- Variable Size: Segments of different sizes

- Logical Units: Matches program structure

- Better Protection: Segment-level protection

- Sharing: Logical units can be shared

- External Fragmentation: Still a problem

📈 Performance Calculations (Interview Math)

Effective Access Time (EAT):

EAT = (1 + α) × ma

Where: α = page fault rate, ma = memory access time

With TLB:

EAT = α × (TLB access time + memory access time) + (1-α) × (TLB access time + 2 × memory access time)

Where: α = TLB hit rate

Example:

TLB hit rate = 90%, TLB access = 1ns, Memory access = 100ns

EAT = 0.9 × (1 + 100) + 0.1 × (1 + 200) = 111.1ns

EAT = (1 + α) × ma

Where: α = page fault rate, ma = memory access time

With TLB:

EAT = α × (TLB access time + memory access time) + (1-α) × (TLB access time + 2 × memory access time)

Where: α = TLB hit rate

Example:

TLB hit rate = 90%, TLB access = 1ns, Memory access = 100ns

EAT = 0.9 × (1 + 100) + 0.1 × (1 + 200) = 111.1ns

🎯 Top Interview Questions & Answers

Q1: Why is paging better than contiguous allocation?

A: Paging eliminates external fragmentation by allowing non-contiguous allocation. Processes can be loaded even if no single large contiguous block is available, improving memory utilization and reducing need for compaction.

A: Paging eliminates external fragmentation by allowing non-contiguous allocation. Processes can be loaded even if no single large contiguous block is available, improving memory utilization and reducing need for compaction.

Q2: How does TLB improve paging performance?

A: TLB is a hardware cache that stores recent page-to-frame mappings. Without TLB, each memory access requires 2 memory accesses (page table + actual data). With TLB hit, only 1 memory access is needed, nearly doubling performance.

A: TLB is a hardware cache that stores recent page-to-frame mappings. Without TLB, each memory access requires 2 memory accesses (page table + actual data). With TLB hit, only 1 memory access is needed, nearly doubling performance.

Q3: What happens on a TLB miss?

A: On TLB miss, system accesses page table in memory, retrieves frame number, updates TLB with this mapping, then accesses actual data. This results in 2 memory accesses but improves future accesses to the same page.

A: On TLB miss, system accesses page table in memory, retrieves frame number, updates TLB with this mapping, then accesses actual data. This results in 2 memory accesses but improves future accesses to the same page.

Q4: How do you calculate physical address from logical address?

A: Split logical address into page number and offset. Use page number as index into page table to get frame number. Physical address = (frame number × page size) + offset.

A: Split logical address into page number and offset. Use page number as index into page table to get frame number. Physical address = (frame number × page size) + offset.

Q5: What is internal fragmentation in paging?

A: Internal fragmentation occurs when a process doesn't fully utilize its last page. For example, if page size is 4KB and process needs 10KB, it gets 3 pages (12KB), wasting 2KB in the last page.

A: Internal fragmentation occurs when a process doesn't fully utilize its last page. For example, if page size is 4KB and process needs 10KB, it gets 3 pages (12KB), wasting 2KB in the last page.

Q6: Why do we need ASID in TLB?

A: ASID (Address Space Identifier) prevents conflicts between different processes. Without ASID, TLB would need to be flushed on every context switch. ASID allows TLB to hold entries for multiple processes simultaneously.

A: ASID (Address Space Identifier) prevents conflicts between different processes. Without ASID, TLB would need to be flushed on every context switch. ASID allows TLB to hold entries for multiple processes simultaneously.

📝 Key Revision Points

Paging Basics: Fixed-size pages (logical) map to fixed-size frames (physical). Page size = Frame size (typically 4KB).

Address Translation: Logical address = Page number + Offset → Physical address = Frame number + Offset

TLB: Hardware cache for page table entries. Typical hit rate 90-99%. Reduces memory accesses from 2 to 1.

Page Table Types: Single-level (simple), Multi-level (space efficient), Inverted (fixed size), Hashed (sparse